Thinking Fast and Estimating Wrong

Software estimates are fundamentally flawed. I've always intuitively known this, but a year ago, I did a little experiment inside Zapier to prove it. Now when I say "prove," I don't mean full-on lab coats, large population, double-blind, scientific study. So please don't beat me up too much about my methodology. I do challenge you to do the same experiment with a similar or larger sample size and find contradictory results. As long as your methodology isn't completely broken, I'd stake a pretty large sum of money on you seeing similar results. Software estimation is flawed because our brains are fundamentally flawed. That may seem obvious, but the specifics can be surprising. Even worse, your brain will keep churning out bad estimates despite knowing how bad it is at estimation.

Your Brain Is Broken

Before I get to the experiment, let's talk about possibly my favorite book ever, Daniel Kahneman's Thinking, Fast and Slow.

Allow me to summarize the book for you:

Your brain has two systems of thinking. One system is fast, but it can't be trusted. The second system is slow and can be accurate, but it's also lazy and happily trusts the other system most of the time.

And that's pretty much it. There, I saved you a lot of time.

Actually, I highly recommend reading the book for yourself. But it's not exactly a quick read (or at least it wasn't for me). And it's sort of depressing. Lots of statistical reasoning and countless examples of how your brain is broken and supplying you with an endless stream of bad decisions that it believes are good decisions.

Even if you haven't read the book, we can try out these systems right now. First, let's try System 1, the fast system.

What's 2 + 2?

That's a good feeling, isn't it? The answer just popped into your head. You probably rewarded yourself with a tiny dopamine hit.

Okay, now let's try System 2, the slow system.

What's 17 * 24?

That's not even complex math, but you immediately get a tired sensation. Or you think "I could do that, but what's in it for me?" You could do it in your head, but it requires the gears to turn, and you are not going to turn those gears simply because some random person on the internet told you to.

If you want the full effect of System 2, go ahead and do the problem in your head. Or you can just trust me and keep going. Most people will just keep going. And that's thanks to evolution.

The first problem uses the simple animal parts of your brain, and those parts of your brain don't require a lot of glucose. The second problem uses the advanced human parts of your brain, like the prefrontal cortex, and those parts use lots of glucose. Thanks to evolution and your desire to survive on limited amounts of food, you'd prefer to use System 1 and save energy. And you'd prefer to avoid System 2 unless there's a good reason to involve it. So, for example, I could offer a hundred dollars for you to solve the harder problem, and you'd probably gladly start doing the problem.

System 1 isn't all bad. It holds all the heuristics, built up from prior experience, that keep us safe and help us work quickly. The car ahead is stopping, so hit the brakes! This download link looks suspicious, so I'll avoid it. I've seen this problem before, so I can quickly help this customer so she can be happy again. I've made hundreds of React components like this one, so I can quickly create another one.

When System 1 is right, that's awesome. But System 1 is often happy to provide answers that are completely wrong for particular situations. And lazy System 2 is happy to let that happen.

The Experiment

So let's connect the dots back to our experiment.

I split thirty-five people randomly into two groups. Everybody got a form with this description:

We are planning to implement collaboration features for the editor. For example, if two customers have the same Zap open at the same time, each will immediately see the other's changes. We'll show a list of users currently editing the Zap and what field they're editing. Think Google Docs for editing Zaps.

If you use Zapier and you're getting excited about this feature, sorry there's no planned release date at the time of this post. :-) But hey, if you want to work on cool stuff like this, apply for an engineering job! Anyway…

Everyone was asked two questions about this project. The first, multiple-choice question was a little different for each group, but I'll wait to show you that one. The second, open-ended question was:

How many weeks do you think this will take?

The average results for this question were:

Group A: 10.7 weeks

Group B: 21.6 weeks

Whoa, Group B thinks this will take twice as much time! What made them think that? It's a simple matter of priming and replacing the question.

Priming

Group A was first asked:

Do you think it will take more or less than 4 weeks to build this?

Group B was first asked:

Do you think it will take more or less than 16 weeks to build this?

Now, intuitively, you may think there's nothing to see here, move along. But that's exactly the problem. Your brain wants to connect these two questions together, but there's nothing binding them. The simple binary question has no connection to the question of how many weeks the project will take. I could have said 4 hours and 16 years, and as wildly unreasonable as those numbers would be, they would only skew the results further.

The questions should be independent. I should be able to ask for the number of weeks first and then ask the binary question second and get the same results. But that's not how our brains work. The very existence of that number on the page will prime your brain to skew towards that number. I could have asked (and it would be fun for someone to try this):

Do you think it will take more or less than 4 weeks for you to paint your house?
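One way to put a number on that skew is an anchoring index, along the lines of the one Kahneman describes in the book: divide the gap between the two groups' average estimates by the gap between the two anchors. The sketch below plugs in the numbers from the experiment above. The function name and its parameters are made up for illustration, and with only thirty-five people the result is suggestive at best.

```python
def anchoring_index(low_anchor, high_anchor, low_mean, high_mean):
    """Gap between the group means divided by the gap between the anchors.

    0.0 would mean the anchors had no visible effect on the estimates;
    1.0 would mean the estimates moved apart as far as the anchors did.
    """
    if high_anchor == low_anchor:
        raise ValueError("the two anchors must differ")
    return (high_mean - low_mean) / (high_anchor - low_anchor)


# Anchors (4 and 16 weeks) and group averages (10.7 and 21.6 weeks)
# taken from the experiment described above.
index = anchoring_index(low_anchor=4, high_anchor=16, low_mean=10.7, high_mean=21.6)
ratio = 21.6 / 10.7

print(f"anchoring index: {index:.2f}")    # anchoring index: 0.91
print(f"Group B / Group A: {ratio:.2f}")  # Group B / Group A: 2.02
```

If the two questions really were independent, you would expect an index close to zero. Here the averages moved almost as far apart as the anchors did.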

Replacing The Question

Estimating the time to complete a project is actually a really hard problem. It involves underlying questions like this:

- How many people will work on this?

- How much of the work can be parallelized?

- Are we going to do customer research first?

- Are we going to do lots of QA?

- Have the developers done this type of work before?

Estimating software projects is almost laughable, in my opinion, because software is invisible, completely separated from the finished product, and typically uses a foundation of ever-changing materials. We're not talking about houses or roads where you can measure by the foot, and the materials remain the same over decades or even centuries. Software projects are built on top of ever-changing code and devices with invisible abstractions and involve text in files that indirectly move pixels on a screen.

It's worth noting that I'm not talking about projects where someone has already done the same type of project on the same devices using the same modules and the same team, etc., etc. I'm sure those projects exist, and those estimates are reasonably predictable. But I personally can't recall ever being involved in that type of software project.

Because the estimation question I asked:

How many weeks do you think this will take?

is so difficult to answer, your brain happily substitutes a different question like:

What's a number of weeks that sounds reasonable given the information I have?

In this case, the only information available is:

- The feature description

- The priming question

So your System 1 goes ¯\_(ツ)_/¯ (shrug emoji) and throws out an answer. And System 2 is more than happy to reserve glucose for something more rewarding than estimation, like actually building something!

Other Problems with Software Estimates (and Your Brain)

Priming and replacing the question are just a couple of the ways that your brain fails. I won't go into detail on them all, but the following are a few that might also affect estimation.

Regression to the Mean

We have a tendency to recognize "hot streaks" and assume they will continue. In reality, everything usually returns to its normal state. If a baseball player hits a bunch of home runs in a row, you're more likely to bet on that player. If Apple has a few incredibly successful quarters in a row, you're more likely to invest in them. In reality, the baseball player will almost always return to his normal batting average. Apple will tend to return to its historical growth.

If you've been lucky picking useful JavaScript libraries for the frontend or AWS has been rock solid and trouble-free, you'll tend to assume that streak will continue and estimate lower.

Framing

Would you rather do an experimental surgery if you have a 99% chance of living or a 1% chance of dying?

More people will choose the former option than the latter, even though they're the same thing! The same thing can happen with estimation. Which of these projects will take longer?

With this new product launch, there's a 95% chance we can stay in business.

With this new product launch, there's a 5% chance we go out of business.

The latter sounds a lot more negative even though they are identical statements. You're likely to be a lot more careful estimating against going out of business.

Trusting "Experts"

If you invest money for retirement (and I hope you do), I hope you either:

- Invest in index funds, or

- Get really lucky

Mutual fund managers who actively invest your money unfortunately cannot beat the market by enough to compensate for their costs. In fact, those actively managed funds could end up costing you 65% of your retirement wealth versus index funds. Active fund managers have failed to beat the market for the last 15 years. But for some reason, those active fund managers make millions of dollars to, on average, lose money. That's because they are considered "experts," and they are trusted to do better than a monkey throwing darts. But the market is inherently unpredictable, so in actuality, the lower-fee monkey is a better value.

There are true experts in some fields. For example, a heart surgeon who has performed the same bypass surgery hundreds of times is probably a valid expert on bypass surgery. But even then, we often trust heart surgeons to give us advice on brain surgery, or even worse, totally unrelated fields.

So when Elon Musk shows up to estimate your new feature, should his estimate be trusted? Okay, it is Elon Musk… hmm, I might be tempted in that case. But generally speaking, building a new thing is inherently unpredictable, and a monkey could probably do just as well.

That's probably another factor at play in the 4-week vs 16-week priming question. When some people see that number, they assume the author of the question (me in this case) had put that number there because it was significant.

WYSIATI

All of the failures mentioned (and lots more) can be boiled down to a single concept:

What you see is all there is.

Or WYSIATI. Your brain will always prefer to take the information right in front of it and use that to arrive at the quickest possible answer.

WYSIATI is particularly nefarious for software estimation because, again, it's invisible! When I ask the concrete contractor to estimate a new sidewalk, he can walk from one end of the path to the other and have a pretty good idea of what he's estimating. Estimating all the possible states that your application can end up in is a lot harder.

Now What?

So from now on, if someone asks for an estimate, you can just send them this link, right? No more estimates!!!

Wait a second, not so fast…

To paraphrase Eisenhower: estimates are useless, but estimating is indispensable.

Okay, maybe not quite indispensable, but also not completely useless. Estimates are good for different things.

If you're in the unfortunate position of bidding for a contract, good luck getting the contract with "it will take as much time as it takes." Even if you're working for an awesome company like Zapier where we don't live and die by estimates, they're still useful. At the very least, rough orders of magnitude allow you to make value judgements. If your estimate is one day for something important, that's a no-brainer to get to work. If your estimate is six months for a nice-to-have, it's maybe better to shelve it.

Estimates also help you plan parallel work. Not perfectly, but hopefully better than with no estimation at all. Perhaps most importantly, they simply provide a shared target date that everyone can work towards, and if the date arrives, you can discuss a more accurate date and whether or not it's worth continuing. Hopefully in a calm manner. If that estimate you gave became a do or die commitment, I am deeply sorry for you.

So given that your brain is inherently faulty, how can you make a better time estimate for your project?

Unfortunately, there isn't much you can do to combat the problems laid out here. If you read Thinking, Fast and Slow, you might, like me, keep waiting for the punchline where Kahneman is going to tell you how to fix your brain. He does no such cheerleading. He admits that even though he has spent a lifetime researching all of these topics, he still has to watch himself keep making the same mistakes over and over again.

There are some things you can do to help a little though:

Awareness Helps a Little

Just being aware of these two systems of thinking helps somewhat. If you know that you're susceptible to these quirks of brain function, then you might be able to at least stop a train wreck. If you see yourself replacing a question, take a step back and see if you can pick a slightly better question. You will never get a perfect estimate, but asking better questions will probably give you a slightly better estimate. Remind yourself that any estimate you give is based on what you see, but there is probably a lot that you don't see. Especially since this is software, it's… yes, I'll say it again… invisible! You can't see most of it!

Don't Estimate Immediately

Unless you're in a bidding war for some contract and you have to give an answer right this minute, there's no reason the person asking for an estimate can't wait a day. So avoid the knee-jerk reaction to respond with an effectively random amount of time when someone asks how long it will take you to build that feature you've never built before. (If you are in some kind of bidding war, I'm sorry, I can't help you much.)

If you answer immediately, you're subject to priming effects. "Do you think you can get this done by next Tuesday?" If someone asks you that, you're now susceptible to just parroting back that date or something close to it. Not to mention that engineers often translate that question into, "Are you awesome enough to get this done by next Tuesday?"

Maybe you haven't eaten lunch yet. So your brain is low on glucose. It's going to lean heavily on heuristics (or priming) instead of actually considering the complexity of the project.

Also, remember WYSIATI: you're stuck with what's in front of you. If you're looking at a slick mockup, maybe it feels like it's already done. How long does it really take to wire up a few buttons? If you don't step away and take in other information, like that rat's nest of code you've been meaning to refactor, then you may think it sounds like a pretty easy job.

Waiting a day to give that estimate will decrease the chance of it being totally useless.

Scorecards

Consider creating simple scorecards or formulas. If you're going to be using heuristics anyway, you might as well make the heuristics better. Something really simple like:

- What is your rough estimate?

- Are there new people involved? Add 50% more time.

- Is this a brand new feature? Double it.

- Does this require a fundamental change in architecture? Double it again.

- Does this require a radical change in architecture? Double it again.

- Are you using unfamiliar technology? Double it again.

You probably should not use that particular scorecard since I just made it up. :-) But it's probably better than just picking a random number. You should consider your own team size and composition, the types of projects you work on, etc. to come up with a scorecard that makes sense. Then you can compare the scorecard to reality and adjust the questions or the numbers. Do not attempt to build a precise estimation instrument, since it's never going to be completely accurate.
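To make the idea concrete, here is a minimal sketch of the made-up scorecard above as code. The names `Question`, `SCORECARD`, and `adjusted_estimate` are hypothetical, and the sketch assumes the adjustments compound (each "yes" multiplies the running total), which is one reasonable reading of "double it again."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    text: str
    multiplier: float  # applied to the running estimate when the answer is yes


# Multipliers copied from the made-up scorecard above; tune them for your team.
SCORECARD = [
    Question("Are there new people involved?", 1.5),
    Question("Is this a brand new feature?", 2.0),
    Question("Does this require a fundamental change in architecture?", 2.0),
    Question("Does this require a radical change in architecture?", 2.0),
    Question("Are you using unfamiliar technology?", 2.0),
]


def adjusted_estimate(rough_weeks, answers):
    """Multiply the rough estimate by the multiplier of every 'yes' answer."""
    if len(answers) != len(SCORECARD):
        raise ValueError("need exactly one answer per scorecard question")
    weeks = rough_weeks
    for question, yes in zip(SCORECARD, answers):
        if yes:
            weeks *= question.multiplier
    return weeks


# A 4-week gut feeling, with new people and unfamiliar technology involved.
print(adjusted_estimate(4, [True, False, False, False, True]))  # 12.0
```

Keeping the questions and multipliers in one small table makes it easy to adjust them once you see how the scorecard compares to reality.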

In case you're wondering, I'm adapting this from some research Kahneman did evaluating soldiers. He was able to move from a battery of psychological tests that were "completely useless" at predicting performance to a set of 6 characteristics scored 1-5 each that were "moderately useful." Because of that lazy System 2, if you spend exhaustive amounts of time doing estimates, you may actually be less accurate than if you have a simple rubric that relies on intuition. Thanks brain!

Break Projects Up into Smaller Tasks

I feel like I'm restating the glaringly obvious here, but it's something that is often forgotten. And let's relate it back to your brain. Let's break down that math problem from earlier into easier steps:

17 * 24

(24 * 10) + (24 * 5) + (24 * 2)

240 + 120 + 48

Each of those smaller problems is much easier on your brain than the original problem. Just like complex code is easier to comprehend when we break it apart and compose from smaller functions, it's easier to understand complex projects when we break those down into smaller tasks. And as a result, it's easier to estimate the effort required for those tasks.
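The same breakdown can be written as a tiny function, just to show that the small pieces really do add up to the original answer. The name `split_multiply` is made up for this sketch.

```python
def split_multiply(a, parts):
    """Multiply a by sum(parts), one easy partial product at a time."""
    partial_products = [a * part for part in parts]
    return partial_products, sum(partial_products)


# 17 is split into 10 + 5 + 2, matching the steps above.
partials, total = split_multiply(24, [10, 5, 2])

print(partials)  # [240, 120, 48]
print(total)     # 408
```

A project estimate built from task estimates has the same shape: a list of small numbers you can reason about one at a time, plus a sum at the end.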

Checking off those smaller tasks more frequently helps build up trust. If you're off in the weeds for six months, it looks suspiciously similar to doing nothing at all or being incompetent. Personally, I'm not a fan of breaking work into arbitrarily sized fixed-length chunks. But breaking work into the smallest chunk possible allows you to set the soonest possible date where everyone can reconvene, discuss any problems, and make a value judgement on the progress. If the norm is that everyone feels like they're seeing progress, that builds up trust for the exceptions where you might need to spend a little more time.

It's Just Your Brain

Ultimately, don't get too upset about System 1 failures. They're going to happen. It's just evolution at work. If you give an estimate and you're not done because you didn't think about some important details, be honest about the mistake. Talk about the surprises. If you use a scorecard, try to improve it. If you didn't break things down enough, try to find some smaller tasks. Don't get caught up chasing sunk costs. Try to learn from previous estimates compared to actual time spent.
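Comparing previous estimates to actual time spent doesn't need to be elaborate. A minimal sketch, assuming you keep a list of (estimated, actual) pairs for finished projects, might look like this. The numbers in `history` are invented purely for illustration, and `overrun_ratios` is a hypothetical helper.

```python
from statistics import median

# Invented history: (estimated weeks, actual weeks) for finished projects.
history = [(2, 3), (4, 10), (1, 1), (6, 9), (3, 6)]


def overrun_ratios(records):
    """Actual time divided by estimated time for each finished project."""
    return [actual / estimated for estimated, actual in records]


ratios = overrun_ratios(history)
typical = median(ratios)  # the median keeps one blowout from dominating

print(f"typical overrun: {typical:.1f}x")  # typical overrun: 1.5x
```

A ratio like that is only a rough correction factor, but it is at least grounded in what actually happened.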

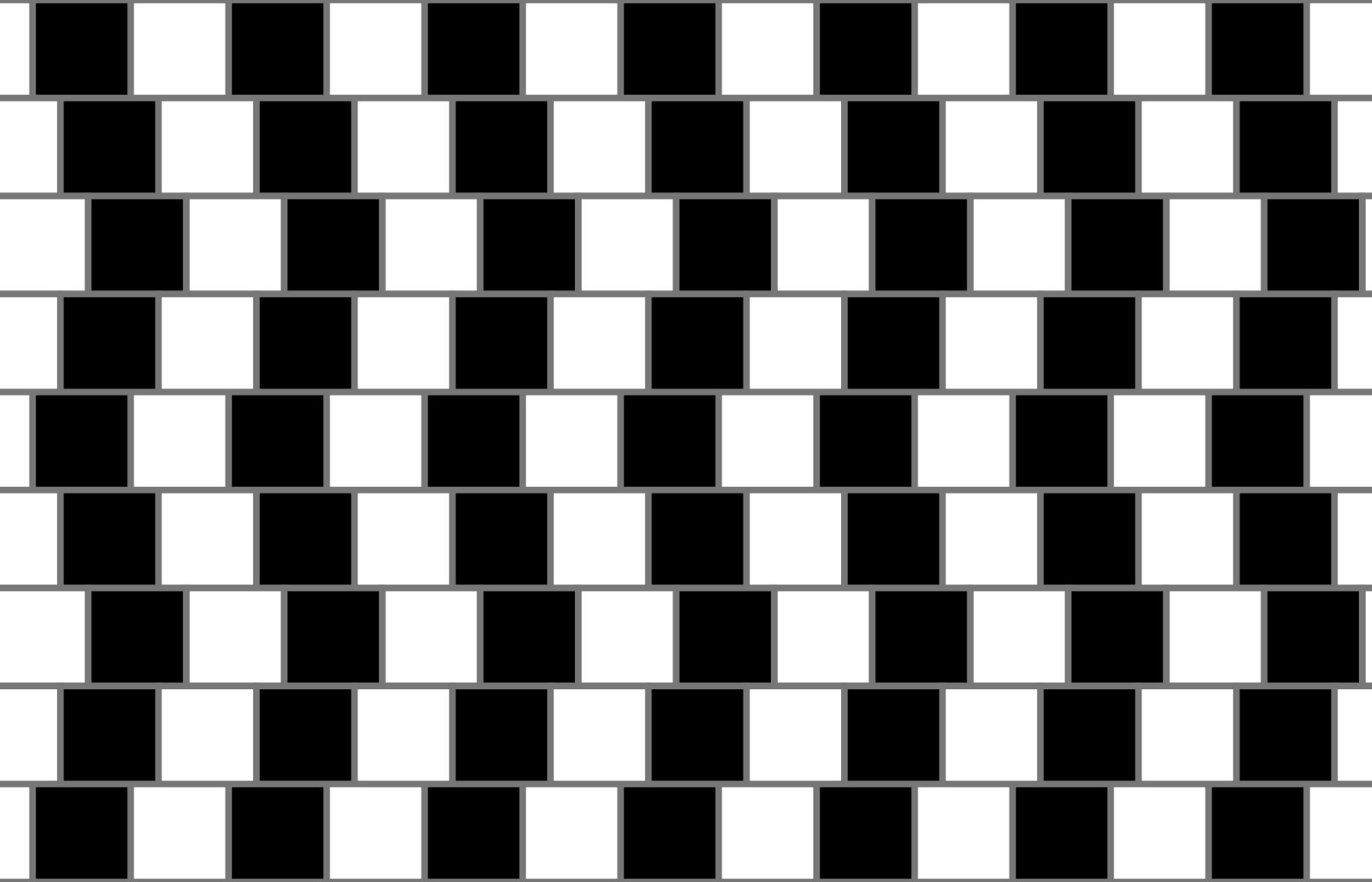

Getting mad about a bad estimate, or any System 1 failure, is like getting mad at thinking these lines are not parallel:

They are parallel. Your brain just refuses to see them that way. Go ahead and hold a straight edge up to the lines. But no matter how many times you do that, it’s impossible for you to stop seeing wavy lines. In the same way, it’s impossible for you to stop giving bad estimates.

(And if someone gets upset about your bad estimate, feel free to point them to this article. I can't guarantee it will calm them down though, since their reaction will likely also be fueled by System 1.)
